|

✉ jian.xu@ia.ac.cn

I am currently an Associate Professor in the PAL group at the Institute of Automation, Chinese Academy of Sciences (CASIA). Before joining CASIA, I spent 3 years in industry at the AI companies HUAWEI and XREAL. I obtained my Ph.D. in Pattern Recognition and Intelligent Systems from the Institute of Automation, Chinese Academy of Sciences in 2020, and my B.S. in Control Science and Engineering from Shandong University in 2015. My research interests lie in Large Multimodal Models, AI4Science, Pose Estimation, and Image Retrieval.


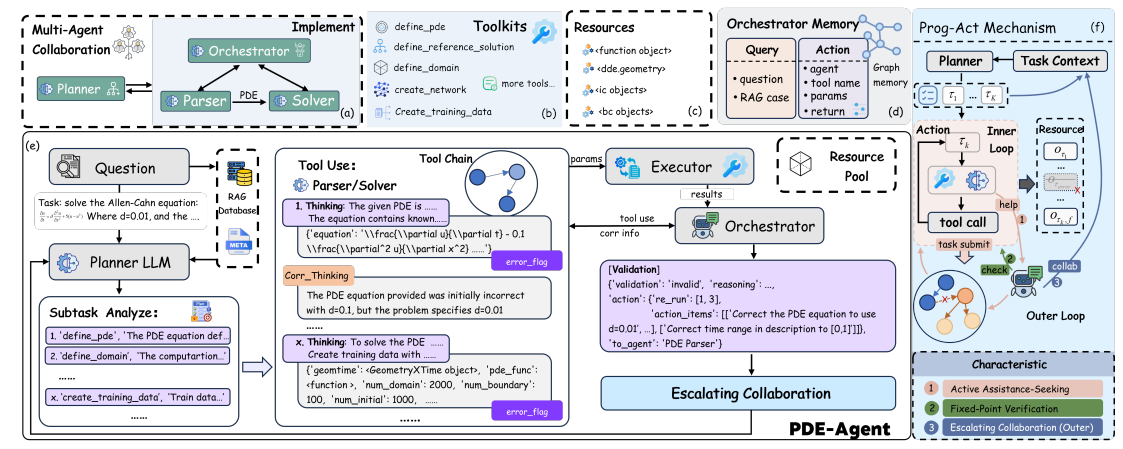

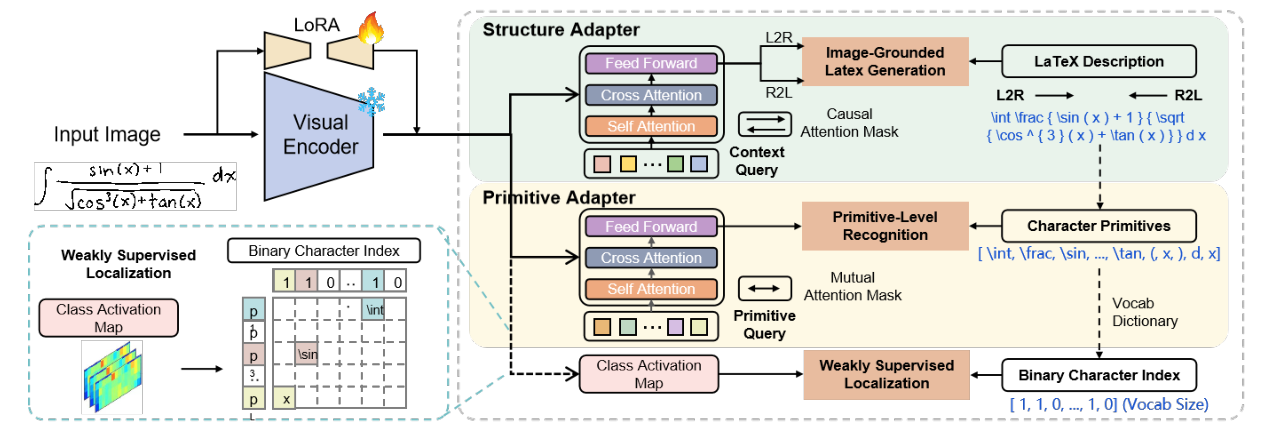

Jianming Liu, Ren Zhu, Jian Xu, Kun Ding, Xu-Yao Zhang, Gaofeng Meng, Cheng-Lin Liu arXiv 2025 [PDF] [Project] |

|

Hang Yin, Yan-Ming Zhang, Jian Xu, Jian-Long Chang, Yin Li, Cheng-Lin Liu IEEE Transactions on Artificial Intelligence, 2025 [Project] |

|

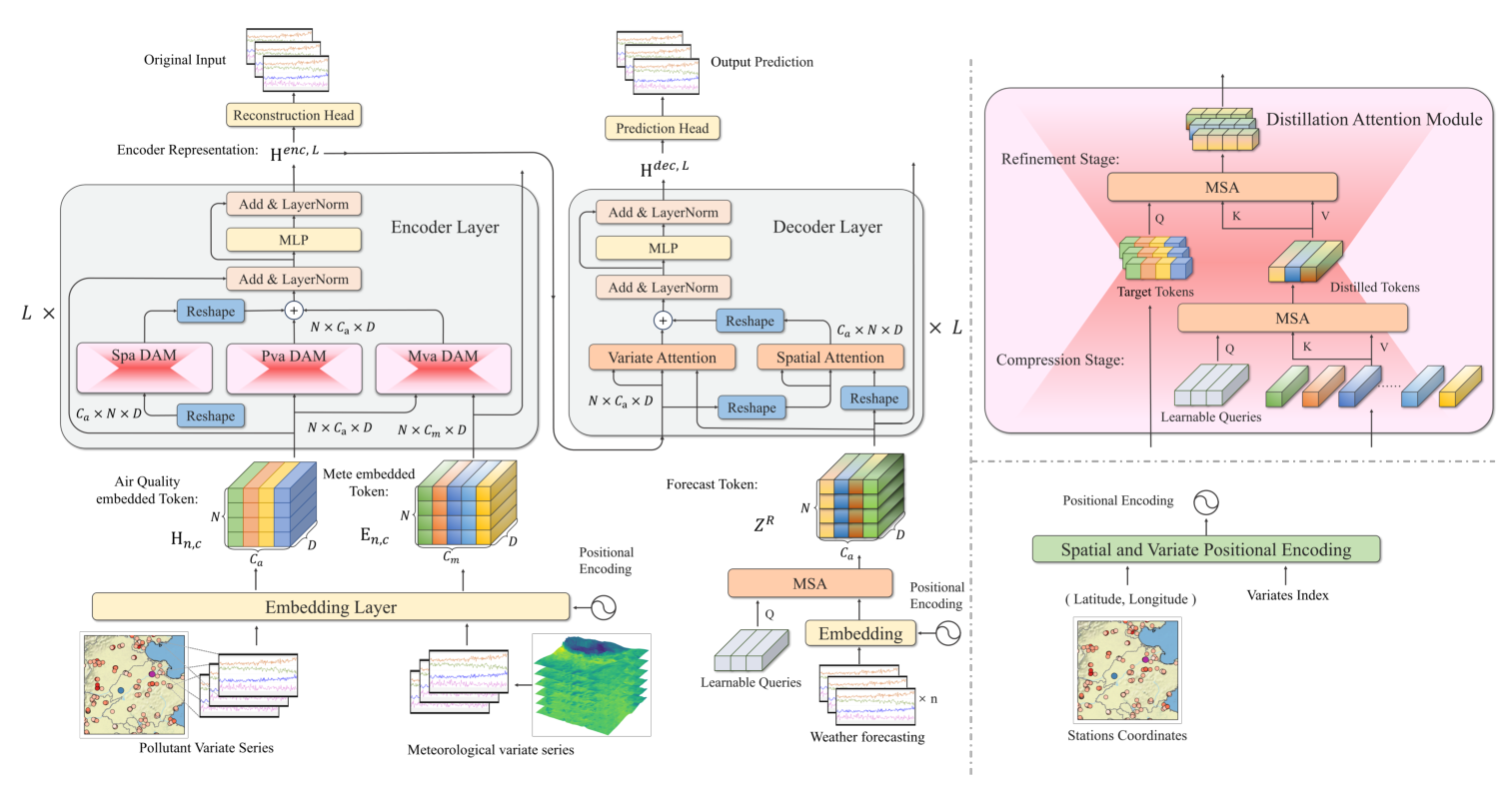

Xinming Wang, Jian Xu, Aslan H. Feng, Yi Chen, Haiyang Guo, Fei Zhu, Yuanqi Shao, Minsi Ren, Hongzhu Yi, Sheng Lian, Hongming Yang, Tailin Wu, Han Hu, Shiming Xiang, Xu-Yao Zhang, Cheng-Lin Liu [PDF] [Project] |

|

Lixin Yang, Licheng Zhong, Pengxiang Zhu, Xinyu Zhan, Junxiao Kong, Jian Xu, Cewu Lu IEEE Transactions on Pattern Analysis and Machine Intelligence, 2025 |

|

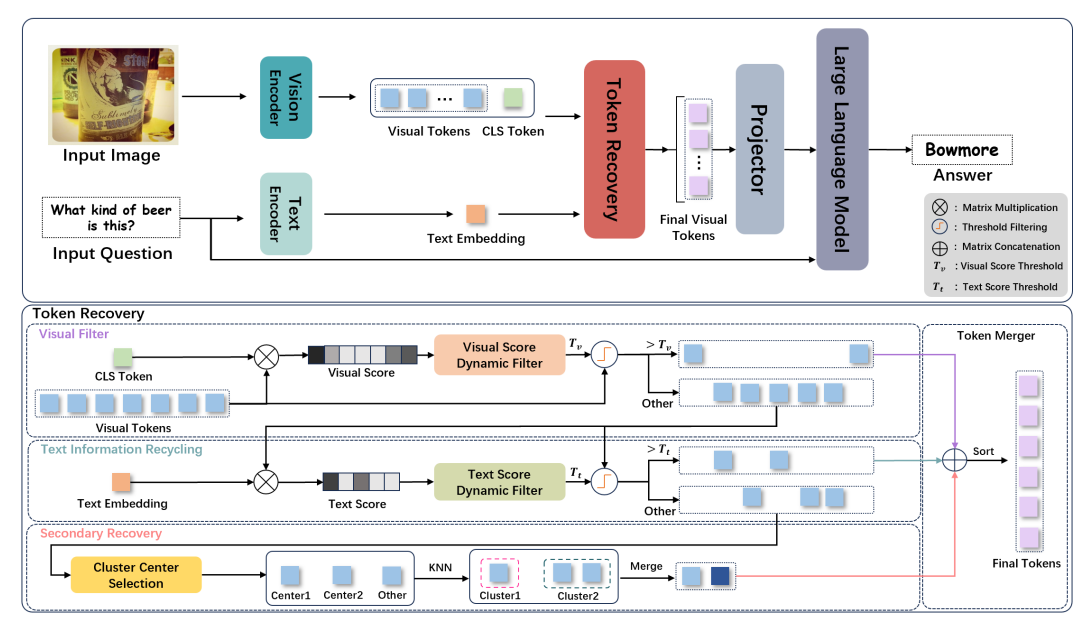

Haiyang Guo, Fanhu Zeng, Fei Zhu, Wenzhuo Liu, Da-Han Wang, Jian Xu, Xu-Yao Zhang, Cheng-Lin Liu ICCV 2025 |

|

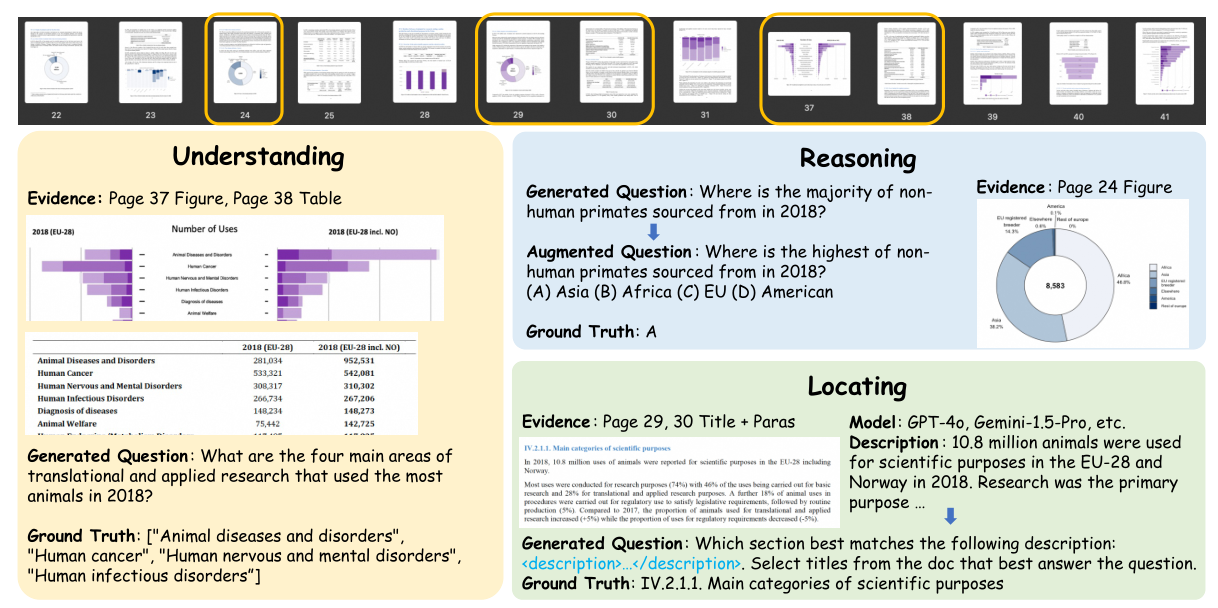

Chao Deng, Jiale Yuan, Pi Bu, Peijie Wang, Zhong-Zhi Li, Jian Xu, Xiao-Hui Li, Yuan Gao, Jun Song, Bo Zheng, Cheng-Lin Liu ACL 2025 Main |

|

Hong-Yu Guo, Fei Yin, Jian Xu, Cheng-Lin Liu ICASSP 2025 |

|

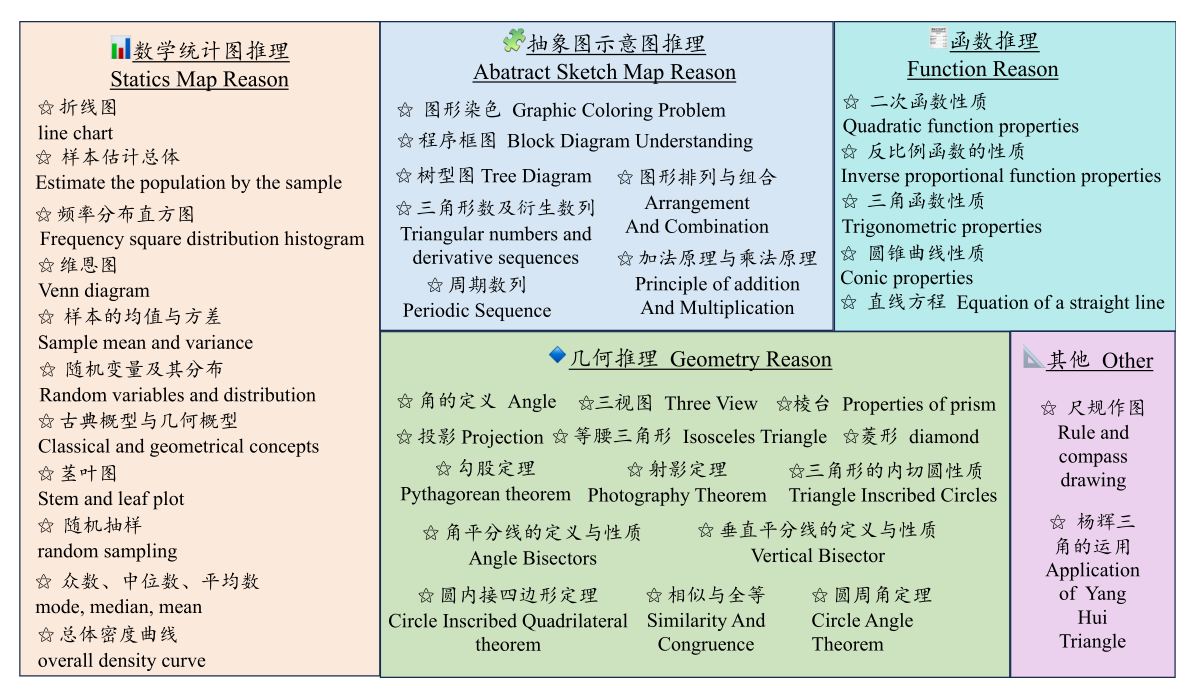

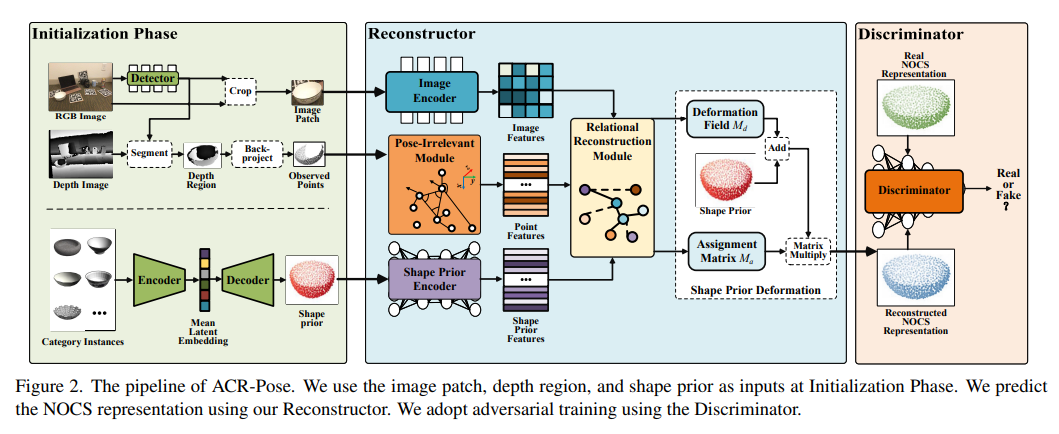

Yi Chen, Jian Xu, Xu-Yao Zhang, Wen-Zhuo Liu, Yang-Yang Liu, Cheng-Lin Liu AAAI 2025 [PDF] |

|

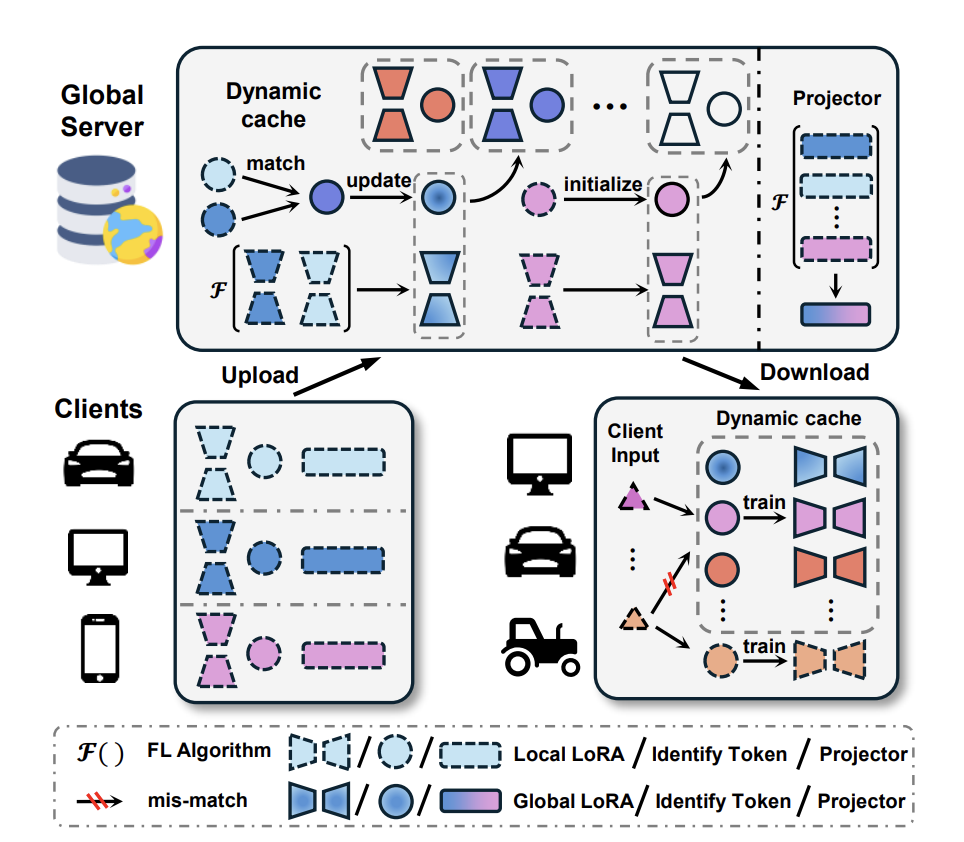

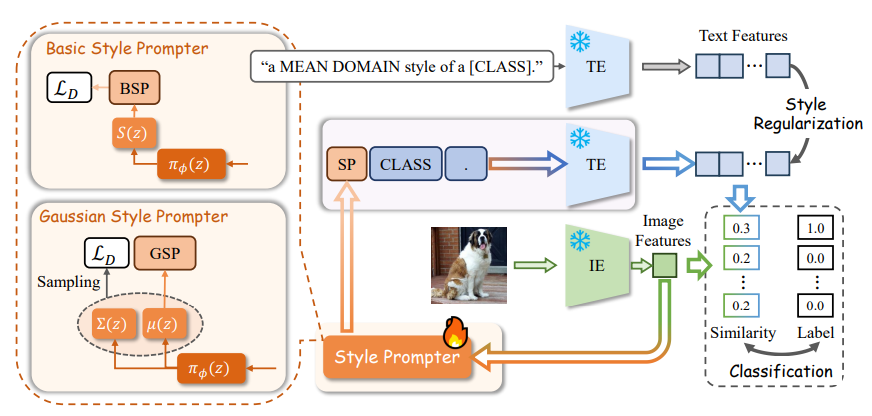

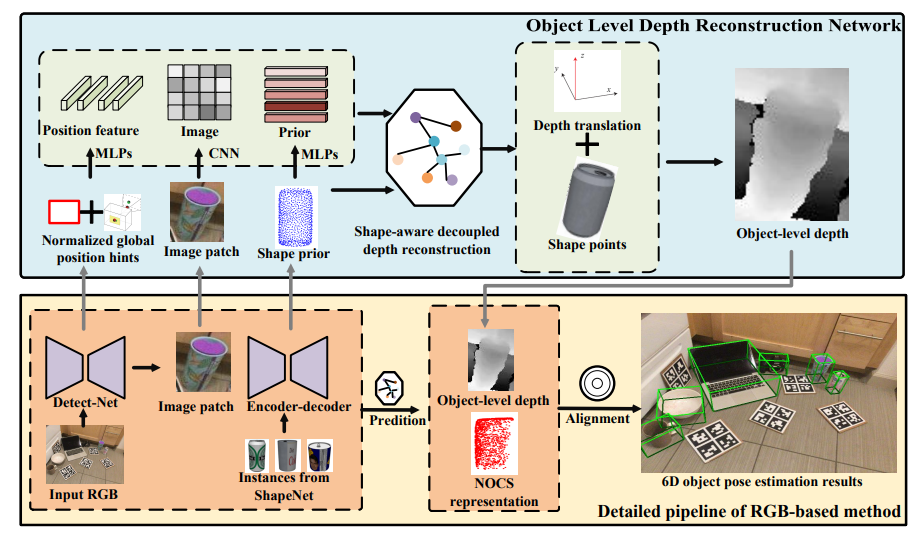

Jiao Zhang, Jian Xu, Xu-Yao Zhang, Cheng-Lin Liu arXiv 2024 [PDF] |

|

Zhongzhi Li, Ming-Liang Zhang, Pei-Jie Wang, Jian Xu, Rui-Song Zhang, Yin Fei, Zhi-Long Ji, Jin-Feng Bai, Zhen-Ru Pan, Jia-Xin Zhang and Cheng-Lin Liu COLING 2025 [PDF] |

|

Kailin Li, Lixin Yang, Zenan Lin, Jian Xu, Xinyu Zhan, Yifei Zhao, Pengxiang Zhu, Wenxiong Kang, Kejian Wu, Cewu Lu AAAI 2024 [PDF] [Project] |

|

Zhaoxin Fan, Zhenbo Song, Zhicheng Wang, Jian Xu, Kejian Wu, Hongyan Liu and Jun He ICMR 2024 [PDF] |

|

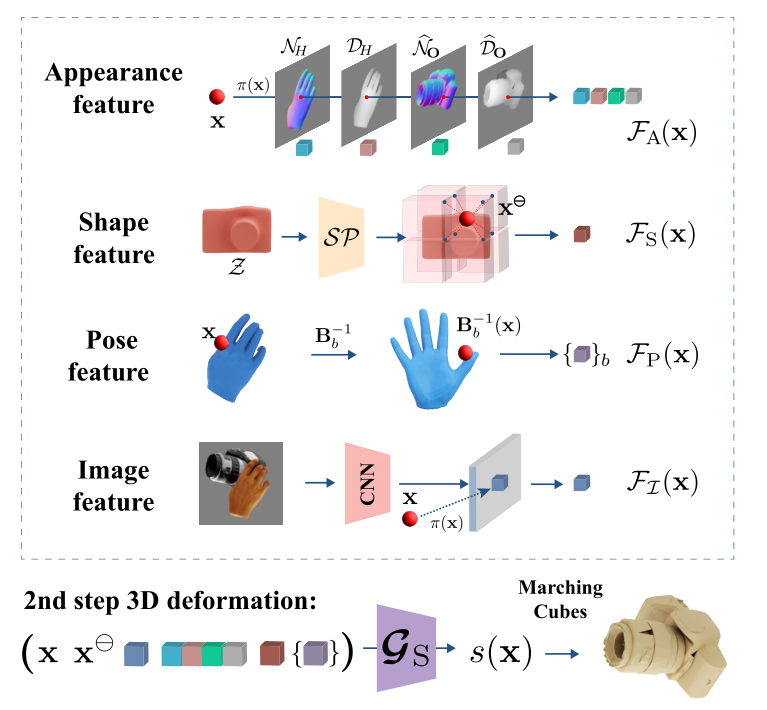

Kailin Li, Lixin Yang, Haoyu Zhen, Zenan Lin, Xinyu Zhan, Licheng Zhong, Jian Xu, Kejian Wu, Cewu Lu ICCV 2023 [PDF] [Project] |

|

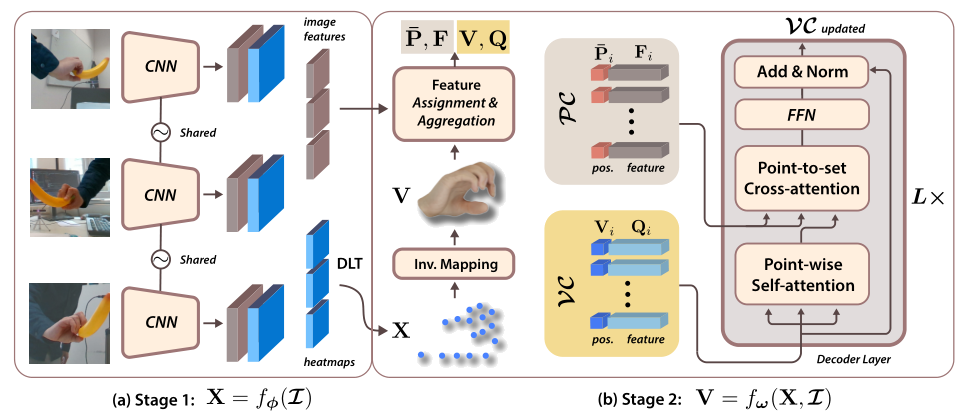

Lixin Yang, Jian Xu, Licheng Zhong, Xinyu Zhan, Zhicheng Wang, Kejian Wu, Cewu Lu CVPR 2023 [PDF] [Project] |

|

Zhaoxin Fan, Zhenbo Song, Jian Xu, Zhicheng Wang, Kejian Wu, Hongyan Liu, Jun He ECCV 2022 [PDF] [Project] |

|

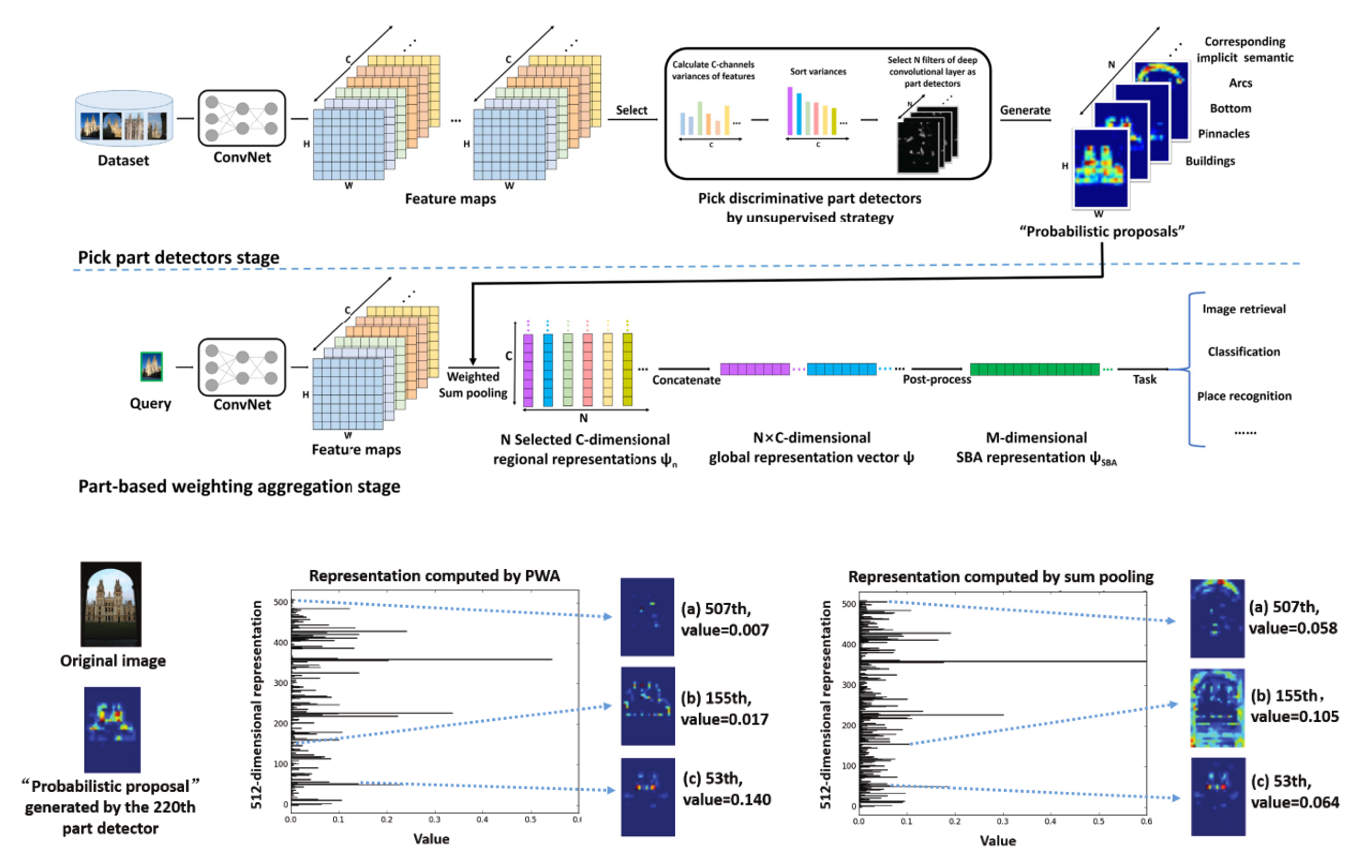

Jian Xu, Chunheng Wang, Cunzhao Shi, Baihua Xiao IEEE Transactions on Image Processing, 2019 [PDF] [Project] |

|

Jian Xu, Chunheng Wang, Chengzuo Qi, Cunzhao Shi, Baihua Xiao IEEE Transactions on Multimedia, 2019 [PDF] [Code] |

|

Jian Xu, Cunzhao Shi, Chengzuo Qi, Chunheng Wang, Baihua Xiao AAAI 2018 [PDF] [Code] |

|

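[10] Chinese Association for Artificial Intelligence (CAAI) Ant Group Research Fund, "Upgrading and Optimizing the Interaction Experience of Native Multimodal Large Models", 2025.12-2026.12, Principal Investigator.

[9] National Natural Science Foundation of China, Young Scientists Fund (Category C), "Research on Methods for Building Materials Science Large Models with Multi-Dimensional Structured Enhancement", 2026.01-2028.12, Principal Investigator.

[8] Youth Fund of the Multimodal State Key Laboratory, "Research on Methods for Building Large-Model-Based Scientific Research Agents", 2025.07-2026.06, Principal Investigator.

[7] Chinese Academy of Sciences Strategic Priority Research Program (Category A), "Scientific Foundation Models", 2025.02-2027.01, Sub-project Leader.

[6] 360 (company), "Research on Private-Domain Knowledge Injection Methods for Large Language Models", 2025.03-2025.11, Principal Investigator.

[5] National Natural Science Foundation of China, Key Program, "Research on Mathematical Reasoning Based on Neuro-Symbolic Systems", 2025.01-2029.12, Key Contributor.

[4] Chinese Academy of Sciences Strategic Priority Research Program (Category A), "Intelligent Analysis of Ground-Air Multimodal Sugarcane Phenotype Data and Breeding of Superior Varieties", 2023.10-2028.10, Key Contributor.

[3] Beijing Municipal Science and Technology Program, "Research Cases and Intelligent Component Development in Key AI for Science Areas", 2023.12-2025.12, Key Contributor.

[2] 2035 Innovation Mission, "Theory and Methods for Building Scientific Large Models", 2024.03-2026.03, Key Contributor.

[1] Huawei, "Research on Efficient Fine-Tuning Techniques for Large Time-Series Forecasting Models", 2024.05-2025.05, Key Contributor. |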

|

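[12] Jian Xu, Zhuoning Xu, Cheng-Lin Liu. Sugarcane disease identification method and apparatus, 2025-04-17, invention patent, CN2025104863215.

[11] Jian Xu, Kejian Wu, Lixin Yang. Method, apparatus, electronic device, medium, and product for determining hand shape, 2023-03-31, invention patent, CN202310344403.

[10] Jian Xu, Zhicheng Wang, Kejian Wu. Method, apparatus, device, medium, and product for outputting keypoint data, 2022-12-20, invention patent, CN202211646062.

[9] Gaotong Yu, Jian Xu, Zhicheng Wang, Kejian Wu. Processing method, apparatus, device, and medium for determining gesture type, 2022-12-12, invention patent, CN202211592961.

[8] Jian Xu, Zhicheng Wang, Kejian Wu. Control apparatus, method, device, and storage medium for a head-mounted display device, 2022-09-07, invention patent, CN202211089235.

[7] Jian Xu, Zhicheng Wang, Kejian Wu. Display method and apparatus for a virtual keyboard, 2022-07-15, invention patent, CN202210833540.

[6] Jian Xu, Yaqi Zhang, Hongma Liu. Image correction method, electronic device, medium, and system on chip, 2021-09-01, invention patent, CN202111020078.

[5] Jian Xu, Chao Zhang, Yaqi Zhang, Hongma Liu, Zhiping Jia. Target tracking method and apparatus, 2021-03-29, invention patent, CN202110336639.

[4] Chao Zhang, Jian Xu, Yaqi Zhang, Hongma Liu, Zhiping Jia, Shuailin Lyu. Method for determining a tracking target and electronic device, 2020-12-29, invention patent, CN202011607731, granted 2023-06-06.

[3] Yaqi Zhang, Chao Zhang, Jian Xu, Hongma Liu. Target tracking method and electronic device, 2020-09-30, invention patent, CN202011066347.

[2] Chunheng Wang, Jian Xu, Baihua Xiao. Satellite cloud image classification method and system, 2020-06-12, invention patent, CN202010024821, granted 2023-04-28.

[1] Chunheng Wang, Jian Xu, Baihua Xiao. Image retrieval method and system, 2020-05-26, invention patent, CN202010026336, granted 2023-04-25. |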

|

The website template was adapted from Xingyu Chen. |